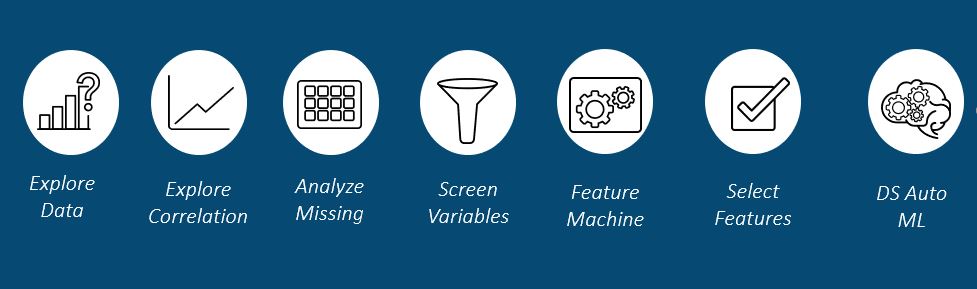

This blog is part of a series on the Data Science Pilot Action Set. In my first two blogs, we introduced the action set and the actions for building data understanding, feature generation, and feature selection. In this blog, we will dive into end-to-end automated machine learning pipelines.

The Data Science Pilot Action Set is included with SAS Visual Data Mining and Machine Learning (VDMML) and consists of actions that implement a policy-based, configurable, and scalable approach to automating data science workflows. The action we will examine in this blog is the dsAutoMl action.

The dsAutoMl action

The dsAutoMl action does it all. It explores your data, generates features, selects features, creates models, and autotunes the hyperparameters of those models. It includes the four policies we saw in my first two blogs: explorationPolicy, screenPolicy, transformationPolicy, and selectionPolicy. Please review those blogs if you need a refresher on data exploration and cleaning, or on feature generation and selection. The dsAutoMl action extends that workflow with model generation and autotuning. A data scientist can choose to build several types of models, such as decision trees, random forests, gradient boosting models, and neural networks (the modelTypes parameter). In addition, the data scientist can control which objective function to optimize (objective) and the number of folds K to use for K-fold cross-validation (kfolds). The output of the dsAutoMl action includes information about the features generated (featureOut), information about the model pipelines generated (pipelineOut), and an analytic store file for generating the features on new data (saveState).

/* Automated machine learning pipelines using the dsAutoMl action on VDMML Version 8.4 */
proc cas;
   loadactionset "dataSciencePilot";
   dataSciencePilot.dsAutoMl /
      table = "hmeq"
      target = "BAD"
      kfolds = 2
      explorationPolicy = {cardinality = {lowMediumCutoff = 40}}
      screenPolicy = {missingPercentThreshold = 35}
      transformationPolicy = {entropy = True, iqv = True, kurtosis = True, Outlier = True}
      selectionPolicy = {topk = 10}
      modelTypes = {"DECISIONTREE", "GRADBOOST"}
      objective = "MAE"
      transformationOut = {name = "TRANSFORMATION_OUT_ML", replace = True}
      featureOut = {name = "FEATURE_OUT_ML", replace = True}
      pipelineOut = {name = "PIPELINE_OUT_ML", replace = True}
      saveState = {name = "ASTORE_OUT_ML", replace = True}
   ;
run;
quit;

Creating and Saving the Best Model in VDMML 8.4

In VDMML 8.4, dsAutoMl does not output an analytic store file for the best-performing model pipeline, but we can create one in a few steps. First, we examine the pipeline table that dsAutoMl outputs (PIPELINE_OUT_ML). This table lists the best-performing models and the features each model was built on. Next, we run the feature generation analytic store (ASTORE_OUT_ML) on our input data and keep only the best-performing features.
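To look at the pipeline and feature tables before picking features, a short sketch like the following can help. It assumes the dsAutoMl outputs were written to the active caslib (CASUSER in this post). It uses the table.fetch action to print the first rows of each table. The row limits in `to=` are arbitrary choices.

```sas
/* Sketch: inspect the dsAutoMl output tables.
   Assumes PIPELINE_OUT_ML and FEATURE_OUT_ML live in the active caslib. */
proc cas;
   /* Model pipelines and the features each one was built on */
   table.fetch / table={name="PIPELINE_OUT_ML"} to=20;
   /* Information about the generated features */
   table.fetch / table={name="FEATURE_OUT_ML"} to=50;
run;
quit;
```

Reading the pipeline table this way tells you which feature names to put in the KEEP statement below.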

/* Creating features */
proc astore;
   score data=CASUSER.HMEQ out=CASUSER.FEATURES rstore=CASUSER.ASTORE_OUT_ML;
run;

/* Keeping only the best features */
/* Replace Your-Best-Features with the feature names listed in PIPELINE_OUT_ML */
data casuser.best_features;
   set casuser.features;
   keep Your-Best-Features;
run;

Now, we will need to add our target variable back into our data with the new features.

/* Adding our target variable back in */
data casuser.tar;
   set casuser.hmeq;
   keep BAD;
run;

data casuser.base_table;
   set casuser.best_features;
   set casuser.tar;
run;
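One caution about the step above. CAS tables are distributed across worker threads and have no guaranteed row order. Pairing two tables row by row with consecutive SET statements may therefore misalign the target with the features.

The sketch below is an alternative that copies BAD into the scored output in the same pass. It assumes the COPYVARS= option of the PROC ASTORE SCORE statement is available in your release. The macro variable best_features is hypothetical: fill it with the feature names from PIPELINE_OUT_ML.

```sas
/* Sketch: generate features and carry the target along in one pass */
%let best_features = ; /* hypothetical: feature names from PIPELINE_OUT_ML */

proc astore;
   score data=CASUSER.HMEQ out=CASUSER.FEATURES
         rstore=CASUSER.ASTORE_OUT_ML
         copyvars=(BAD);   /* copy the target from the input table */
run;

/* Keep the target plus the selected features */
data casuser.base_table;
   set casuser.features;
   keep BAD &best_features;
run;
```

Because each output row comes from scoring its own input row, the target stays attached to the right features.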

Finally, we create our best-performing model. I used the model task in SAS Studio and filled in my model information with the task wizard. In the wizard, make sure you turn on autotuning and choose to save a scoring model (an analytic store). I saved mine as CASUSER.GRAD_ASTORE.
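If you prefer code to the task wizard, the following is a rough sketch of an autotuned gradient boosting model that saves its analytic store. It is not the exact code the SAS Studio task generates. The macro variable best_features is hypothetical, and the sketch assumes the selected features are all interval (numeric) inputs. The store name CASUSER.GRAD_ASTORE matches the one used for scoring later in this post.

```sas
/* Sketch: autotuned gradient boosting with a saved scoring model */
%let best_features = ; /* hypothetical: feature names from PIPELINE_OUT_ML */

proc gradboost data=casuser.base_table;
   target BAD / level=nominal;             /* binary target */
   input &best_features / level=interval;  /* assumes numeric features */
   autotune;                               /* tune hyperparameters with default settings */
   savestate rstore=casuser.grad_astore;   /* analytic store for scoring new data */
run;
```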

Scoring New Data in VDMML 8.4

To score our new data, we must first generate the features and then use our model to make our predictions. Luckily, we have an analytic store file for each. Running the block of code below on our new data will allow us to move from data to prediction in just a few lines of code.

/* Using our model on new data */
/* Step 1: generate the features */
proc astore;
   score data=PUBLIC.HMEQS_1_MONTH1 out=PUBLIC.HMEQS_Feat rstore=CASUSER.ASTORE_OUT_ML;
run;

/* Step 2: score the features with the gradient boosting model */
proc astore;
   score data=PUBLIC.HMEQS_Feat out=PUBLIC.HMEQ_NEW_SCORED rstore=CASUSER.GRAD_ASTORE;
run;
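To check what the scoring step produced, a quick sketch can list the columns of the scored table and print its first few rows. It uses the table.columnInfo and table.fetch actions. It assumes the PUBLIC libref in the code above points to the PUBLIC caslib.

```sas
/* Sketch: inspect the scored output table */
proc cas;
   /* Which columns (including predictions) the astore wrote */
   table.columnInfo / table={name="HMEQ_NEW_SCORED", caslib="PUBLIC"};
   /* First few scored rows */
   table.fetch / table={name="HMEQ_NEW_SCORED", caslib="PUBLIC"} to=5;
run;
quit;
```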

Creating and Saving the Best Model in VDMML 8.5

With the latest release of VDMML 8.5 on Viya 3.5, dsAutoMl does generate model astores! Scoring new data is now much easier. Below, we change the saveState parameter to use modelNamePrefix, so the resulting astores are named with the specified prefix. We also set topK = 1 so that an astore is created only for the top-performing model.

/* Automated machine learning pipelines using the dsAutoMl action on VDMML Version 8.5 */
proc cas;
   loadactionset "dataSciencePilot";
   dataSciencePilot.dsAutoMl /
      table = "hmeq"
      target = "BAD"
      kfolds = 2
      explorationPolicy = {cardinality = {lowMediumCutoff = 40}}
      screenPolicy = {missingPercentThreshold = 35}
      transformationPolicy = {entropy = True, iqv = True, kurtosis = True, Outlier = True}
      selectionPolicy = {topk = 10}
      modelTypes = {"DECISIONTREE", "GRADBOOST"}
      objective = "MAE"
      transformationOut = {name = "TRANSFORMATION_OUT_ML", replace = True}
      featureOut = {name = "FEATURE_OUT_ML", replace = True}
      pipelineOut = {name = "PIPELINE_OUT_ML", replace = True}
      saveState = {modelNamePrefix = "ASTORE_OUT_ML", replace = True, topK = 1}
   ;
run;
quit;

/* Using our model on new data */
proc astore;
   score data=PUBLIC.HMEQS_1_MONTH1 out=PUBLIC.HMEQS_Feat rstore=CASUSER.ASTORE_OUT_ML_FM_;
run;

proc astore;
   score data=PUBLIC.HMEQS_Feat out=PUBLIC.HMEQ_NEW_SCORED rstore=CASUSER.ASTORE_OUT_ML_GRADBOOST_1;
run;
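Because the astore names are built from modelNamePrefix, you may need to look them up before scoring. The following is a minimal sketch, assuming the astores were saved to CASUSER as in the code above. The table.tableInfo action lists the tables in that caslib. Names such as ASTORE_OUT_ML_FM_ and ASTORE_OUT_ML_GRADBOOST_1 should appear among them.

```sas
/* Sketch: list the tables in CASUSER to find the astores
   that dsAutoMl named with the modelNamePrefix value */
proc cas;
   table.tableInfo / caslib="CASUSER";
run;
quit;
```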

Conclusion

The dsAutoMl action is all that and a bag of chips! In this blog, we automated every stage of the data science workflow with just one action. But wait, there's more! In my first three blogs, we focused solely on the SAS programming language. In my next blog, I will walk through this entire process in Python.

Comments

Can I use these CAS actions in SAS Viya 3.4, or do I have to wait for SAS Viya 3.5?

You can use these actions on Viya 3.4.